One of EvE’s founding hypotheses was that comprehensive pharmome data could serve as the experimental foundation for developing AI models for science. I was thrilled to see this put into practice by FutureHouse, where they leveraged EvE’s dataset as part of the training and validation of ether0, their chemistry-focused model. This work revealed an important insight: modern domain-specific AI models can be trained with remarkable data efficiency. By building on existing capabilities like AI reasoning and optimizing the approach for the task at hand, exceptional science models can be developed with data that’s within our reach.

Something that became clearer to me about modern AI (by modern I mean - in 2025!) is a way in which it’s now fundamentally different from classic machine learning (even though the underlying mathematical mechanism is not as different as people might think). Machine learning models are typically developed in isolation - based on one dataset, albeit perhaps a very large one brought together from many sources. The model is built based on what can be learned from this data, starting from scratch. Modern AI models, however, can build on the models that came before them, borrowing and adapting capabilities to the task at hand.

This is how the ether0 model was created. They started with an open base model (Mistral-24B-Instruct) – the kind that’s capable of carrying on a chat conversation. Then they used another open reasoning model (DeepSeek-R1) to generate reasoning “traces” about the type of chemistry tasks ether0 was designed for. An interesting note is that R1 (the reasoning model) had accuracy below 1% at actually carrying out these tasks. Despite this, using this output to train the base model how to reason about chemistry led to improved performance in the end. It’s only at this point, after a base AI model was trained on the output of another AI model, that data came to bear on further developing into the model. The foundation represents a tremendous set of capabilities – language, reasoning, and broad chemistry knowledge – on which to build.

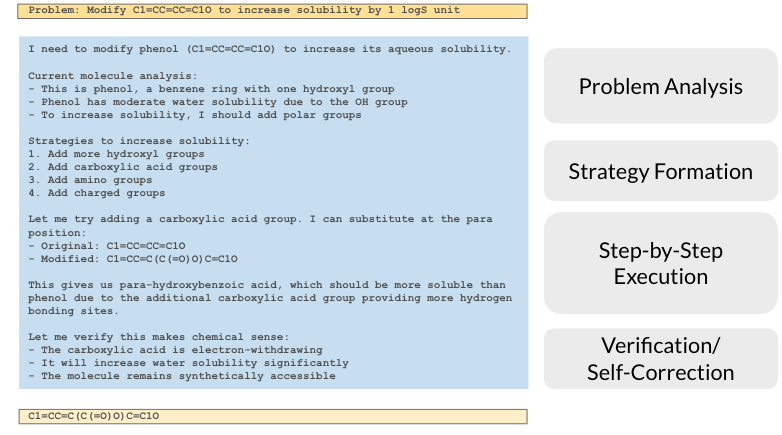

But first, what is a reasoning trace, you say? This is an example below. The reasoning model is given a chemistry task, it “thinks out loud” in a particular way to reason through the problem, then provides an answer (presumably an incorrect one in this case). It’s the thinking that’s used to train the base model to reason, by providing it with a large number of these types of outputs.

After this process, the next step was using reinforcement learning to train the model to optimize its performance on the various chemistry tasks at hand. Reinforcement learning is a process by which the AI developers design a reward function that scores the answer the model provides when given a task to accomplish. The reward function in this case requires both the right format and an accurate answer. The accuracy of the answer is evaluated based on “ground truth” data. This is where EvE comes in. Our pharmome dataset was used to give the model feedback on its performance on the receptor binding task. Given this reward function, the model is let loose to figure out how to do the task best, improving little by little over many iterations. This process, and our understanding that this is the best structure for AI training, is fascinating in itself. I’ve written more about it here.

In the end, the developers discovered that adding reasoning capabilities before carrying out reinforcement learning and distilling it all into a single model, leads to exceptional performance on some tasks but not others. They were able to tie this back to the benefit of reasoning for particular types of tasks. This indicates an important direction for future work, in which different types of models can be deployed for different tasks, optimizing the overall results.